ACM Comm 2018 03 How Can We Trust a Robot (Notes)

How Can We Trust a Robot?

"If intelligent robots take on a larger role in our society, what basis will humans have for trusting them?"

Can a robot reason ethically, in a manner consistent with the norms of human society?

Robots can be hardware or software. If the robot's future behavior is unknown, or unknowable, within limits, where is the basis for any trust?

People

Ideas

- Trust is essential to cooperation, which produces positive-sum outcomes that strengthen society and benefit its individual members.

- Individual utility maximization tends to exploit vulnerabilities, eliminating trust, preventing cooperation, and leading to negative-sum outcomes that weaken society.

- Social norms, including morality and ethics, are a society's way of encouraging trustworthiness and positive-sum interactions among its individual members, and discouraging negative-sum exploitation.

- To be accepted, and to strengthen our society rather than weaken it, robots must show they are worthy of trust according to the social norms of our society.

- What is trust for?

- Self-driving car kills woman in Arizona.[1]

- Robots need to follow, and understand, social norms to earn trust from human society.

- This means a separate robot society, with its own rules, will form. The differences will be interesting.

- When do robots participate in society and not just run errands?

- It's a complexity issue. When robot behavior is complex enough that an average human treats the robot as a human, robots are participating in human society.

- Her [2013][2]

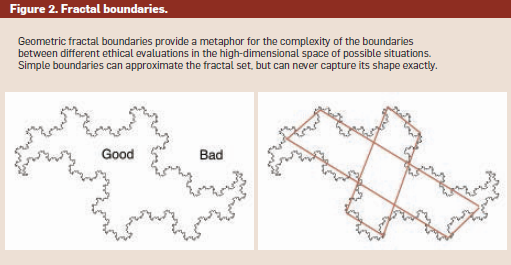

- The performance requirements on moral and ethical social norms are quite demanding.

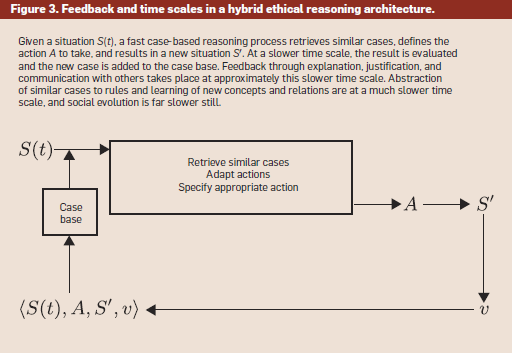

- (1) Moral and ethical judgments are often urgent, needing a quick response, with little time for deliberation.

- (2) The physical and social environments within which moral and ethical judgments are made are unboundedly complex.

- (3) Learning to improve the quality and coverage of moral and ethical decisions is essential, from personal experience, from observing others, and from being told.

- Complicating this even more, robots can share ethical situations along with their judgement in perfect detail with other robots or future robots.

- Three major philosophical theories of ethics

- “Trust is a psychological state comprising the intention to accept vulnerability based upon positive expectations of the intentions or behavior of another.” D.M. Rousseau.

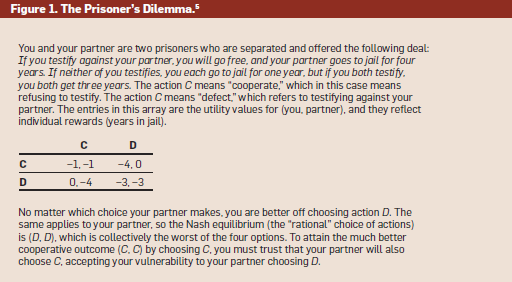

- In the Prisoner's Dilemma the single Nash Equilibrium (both prisoners defect) is nearly the worst possible outcome for each prisoner and the worst outcome for society as a whole. Each prisoner's very worst outcome is cooperating while the other defects.

- In the Prisoner's Dilemma cooperation produces a better outcome for the prisoners and society, but is not a Nash Equilibrium.

- The Public Goods Game[6]: an \(N\)-person variant of the Prisoner's Dilemma.

- How can a robot be punished for violating norms, morals or laws?

- Understanding the whole elephant

- The Blind Men and the Elephant[7]

- The design focus for self-driving cars should not be on the Deadly Dilemma, but on how a robot’s everyday behavior can demonstrate its trustworthiness.
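
The game-theory notes above (Prisoner's Dilemma, Nash Equilibrium, Public Goods Game) can be checked with a small sketch. This is only an illustration, not taken from the article: the payoff numbers (negative years in prison), the endowment, the multiplier, and all function names are assumptions chosen for the example.

```python
from itertools import product

ACTIONS = ("cooperate", "defect")

# Assumed payoffs as (prisoner A, prisoner B), in negative years of prison.
# These numbers are illustrative; they are not copied from the article's figure.
PAYOFFS = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}


def is_nash(profile, payoffs=PAYOFFS):
    """Return True if no player gains by unilaterally switching actions."""
    for player in range(2):
        current = payoffs[profile][player]
        for alternative in ACTIONS:
            deviated = list(profile)
            deviated[player] = alternative
            if payoffs[tuple(deviated)][player] > current:
                return False
    return True


def nash_equilibria(payoffs=PAYOFFS):
    """List every pure-strategy Nash equilibrium of the 2x2 game."""
    return [p for p in product(ACTIONS, repeat=2) if is_nash(p, payoffs)]


def social_welfare(profile, payoffs=PAYOFFS):
    """Sum of both players' payoffs, a simple stand-in for 'society'."""
    return sum(payoffs[profile])


def public_goods_payoffs(contributions, endowment=10.0, multiplier=2.0):
    """One-shot public goods game (hypothetical parameters).

    Each player keeps what they do not contribute; the pot is multiplied
    and split equally among all players.
    """
    n = len(contributions)
    share = multiplier * sum(contributions) / n
    return [endowment - c + share for c in contributions]


if __name__ == "__main__":
    print("Nash equilibria:", nash_equilibria())
    for profile in product(ACTIONS, repeat=2):
        print(profile, PAYOFFS[profile], "welfare:", social_welfare(profile))
    print("All contribute:", public_goods_payoffs([10, 10, 10, 10]))
    print("One free rider:", public_goods_payoffs([0, 10, 10, 10]))
```

With these assumed payoffs, mutual defection is the only pure-strategy equilibrium, has the lowest combined payoff (-4), and is each prisoner's second-worst result (-2, versus -3 for cooperating against a defector). In the public goods sketch, with four players and a multiplier between 1 and 4, the free rider ends up with more than the contributors even though everyone does better under full contribution than under none.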

References

- Artificial Intelligence (AI)

- Terminator 2: Judgment Day [1991][8]

- SkyNet[9]

- Robot & Frank [2012][10] In order to promote Frank’s activity and health, an eldercare robot helps Frank resume his career as a jewel thief.

- Burton, E., Goldsmith, J., Koenig, S., Kuipers, B., Mattei, N. and Walsh, T. Ethical considerations in artificial intelligence courses. AI Magazine, Summer 2017; arxiv:1701.07769.[11]

- Arkin, R.C. Governing Lethal Behavior in Autonomous Robots. CRC Press, 2009.[12]

- Leyton-Brown, K. and Shoham, Y. Essentials of Game Theory. Morgan & Claypool, 2008.

- Russell, S. and Norvig, P. Artificial Intelligence: A Modern Approach. Prentice Hall, 3rd edition, 2010.

- The Stanford Encyclopedia of Philosophy[13]

- Deadly Dilemma[14] If a robot car kills a pedestrian to save itself and its passengers, why let it on the road? If a robot car destroys itself and its passengers to save a pedestrian, why buy it? But this dilemma is rare.

- The Near Miss Dilemma is more common. Reasoning actors, human and robot, usually find some third or fourth way to avoid an outcome that produces casualties.

- Game Theory[15]

- Nash Equilibrium[16]

- Prisoner's Dilemma[17] See Figure 1.

- Deviance and Social Control: A Sociological Perspective, 2nd Ed. by Michelle Inderbitzin[18]

- Isaac Asimov's Three Laws of Robotics[19]

- I, Robot[20]

- valence[21] new vocabulary word.

Figures
